Trained networks can be used in the View and Segment (PSEG) tools to segment or classify input images that have the same characteristics as the training images. Apply the same preparations as for the training samples, including associations and the cropping VOI definition.

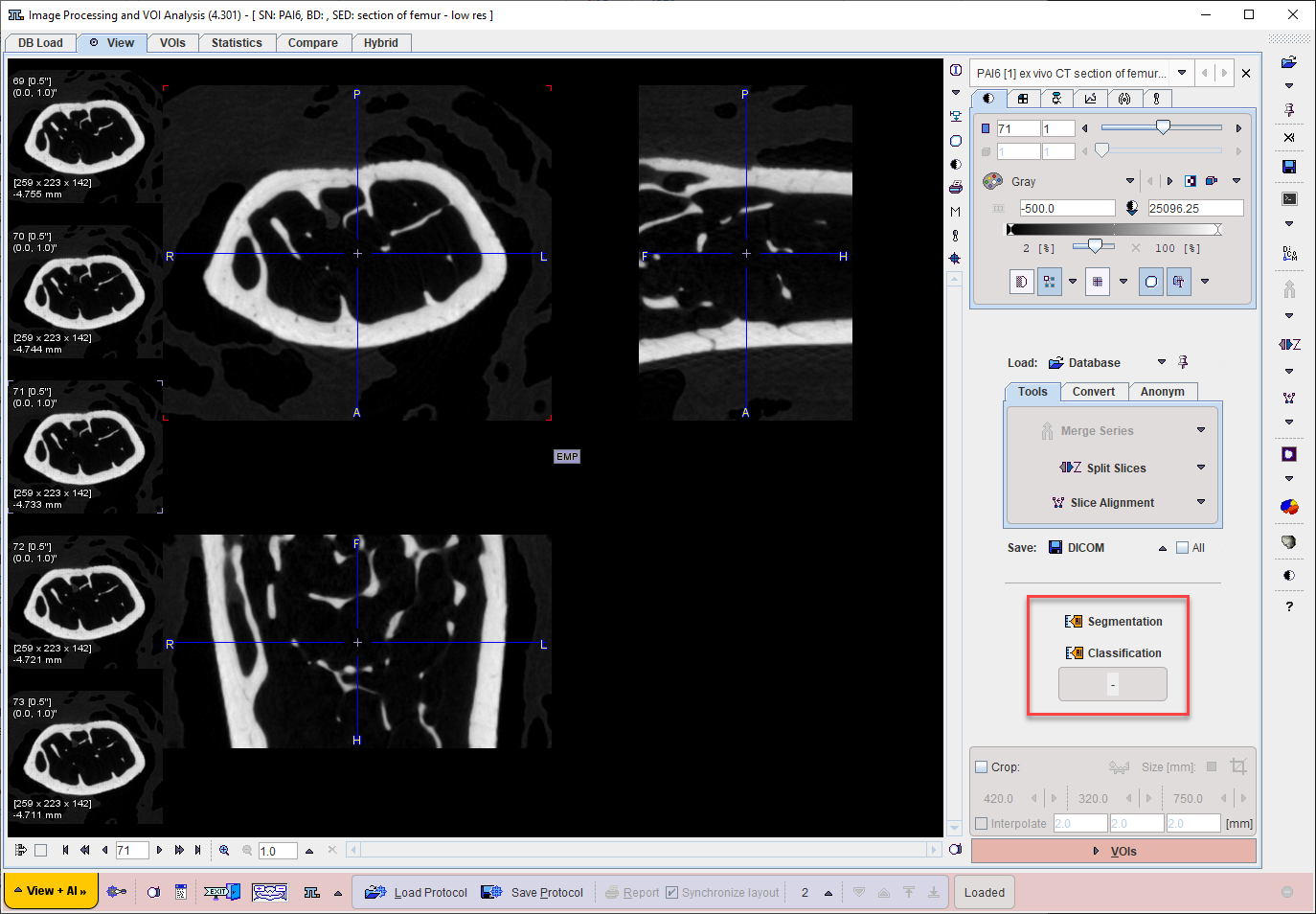

AI-based segmentation in View tool

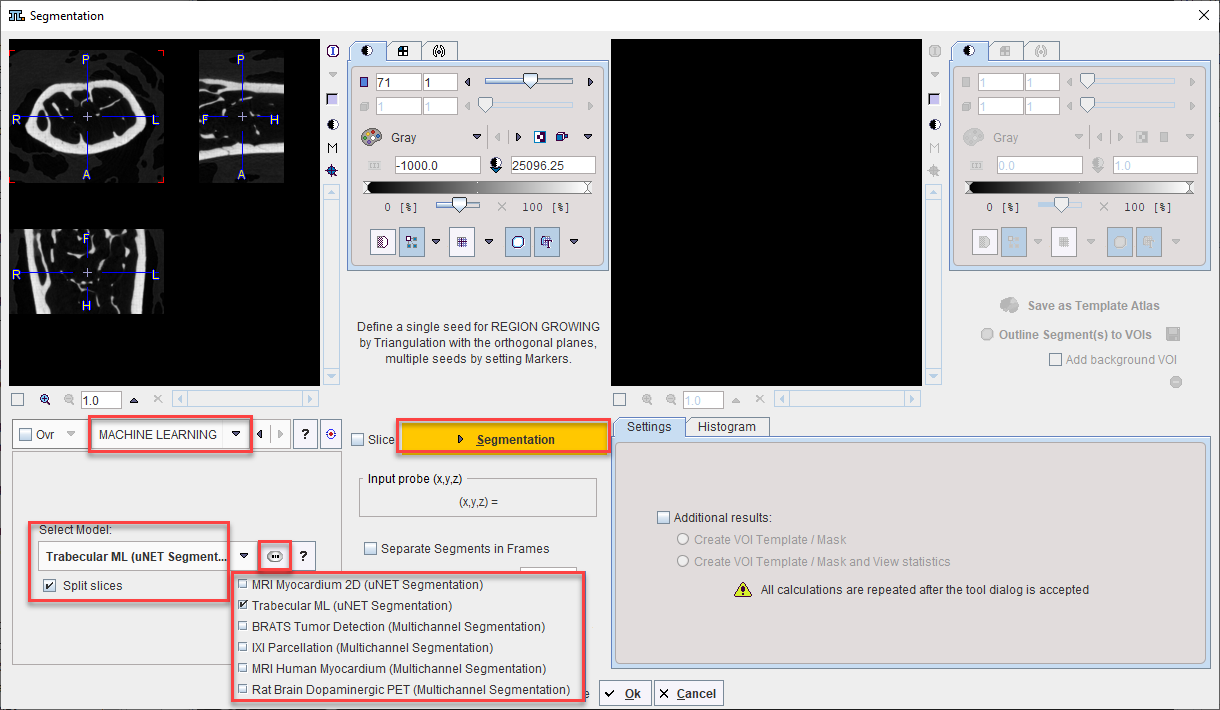

A shortcut to the Segmentation interface with the MACHINE LEARNING segmentation method is available on the View tab for the currently loaded data. Alternatively, the Segmentation tool can be opened from the Image Processing Tools (to generate segments) or from the VOI Tools (to generate VOIs directly, without segments), and MACHINE LEARNING selected from the list of segmentation methods on the left:

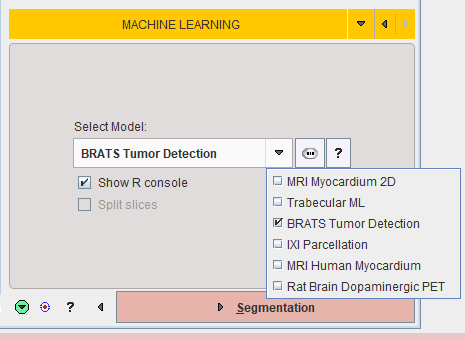

After selecting the MACHINE LEARNING segmentation method, select the trained model from the Select Model list. Trained models available in resources/pai are listed with names derived from the weights folder and the architecture used.

Additionally, self-trained models saved in the database can be selected using the AI Learning Set browser:

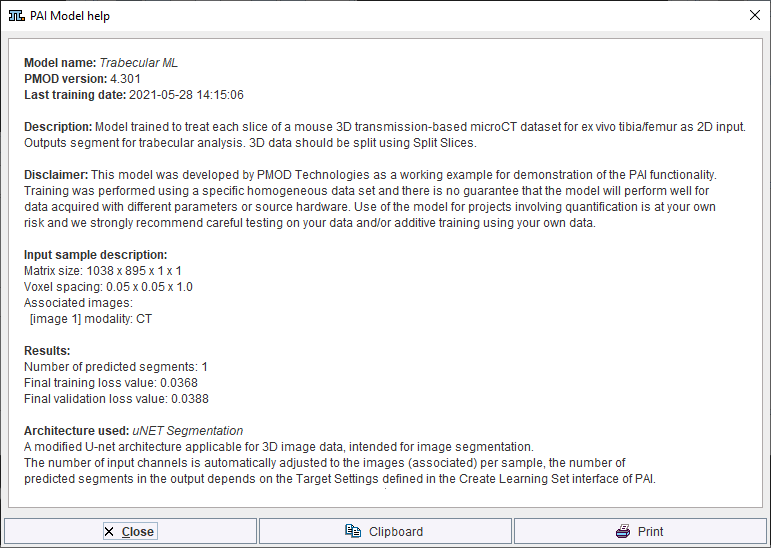

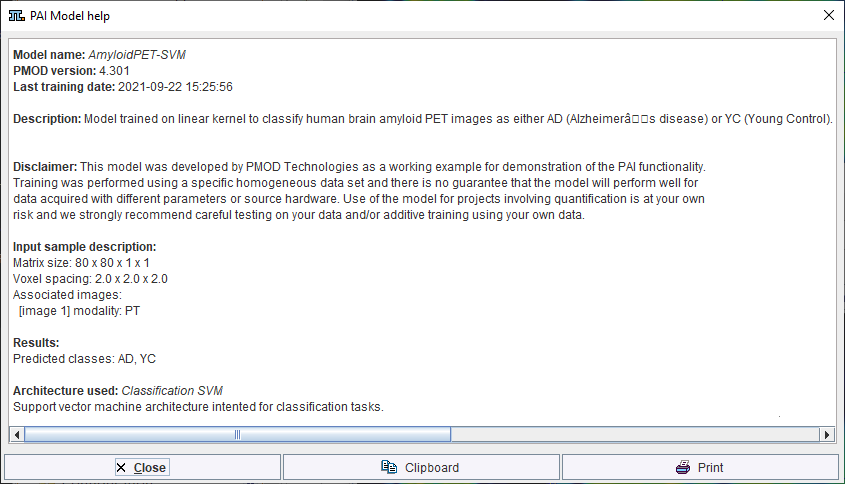

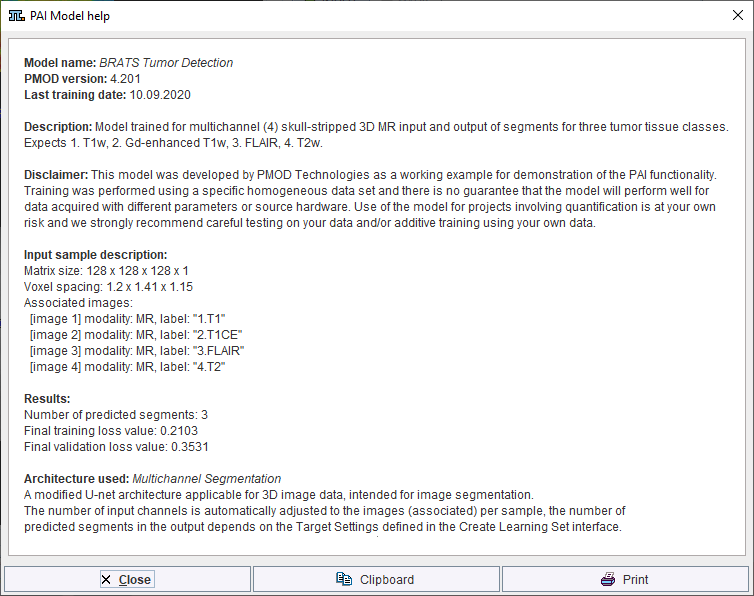

Further information about the selected trained model is available in the model help:

For self-trained models, the help text shown is defined by the user when creating the Learning Set (and in the architecture Python files, if applicable).

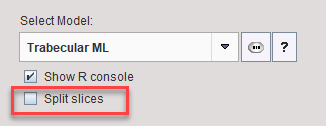

The dimensions of the input data for prediction must match the dimensions of the data used to train the model. The Split Slices and Split Frames options appear below Select Model when the input data for prediction has more dimensions than the training data. Split Frames reduces 4D data to a series of 3D images that are segmented sequentially. Likewise, Split Slices reduces 3D data to a series of 2D images. After prediction of each split image, the segment is rebuilt and Segments/VOIs are returned according to the dimensions of the input data. If Split Frames/Slices are not selected correctly for the model, an error is returned stating that the dimensions of the input data do not match the data used for training.
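The split-and-rebuild behavior described above can be illustrated with a minimal Python sketch. It is not PMOD code: images are modeled as nested lists with the leading axis ordered as frames, then slices (an assumption for illustration), `model` is any callable standing in for the trained network, and `ndim` and `predict_with_split` are hypothetical helper names.

```python
from typing import Callable

# Images are modeled as nested lists: a 2D image is a list of rows,
# a 3D image a list of 2D slices, and a 4D image a list of 3D frames.


def ndim(image) -> int:
    """Count the nesting depth of a non-empty, regular nested-list image."""
    depth = 0
    while isinstance(image, list):
        depth += 1
        image = image[0]
    return depth


def predict_with_split(image, model: Callable, train_ndim: int,
                       split_frames: bool = False, split_slices: bool = False):
    """Apply a model trained on train_ndim-D data, splitting the input if allowed.

    Split Frames turns 4D data into 3D frames; Split Slices turns 3D data into
    2D slices. Each part is predicted separately and the results are rebuilt
    in the original order, so the output has the input's dimensions.
    """
    n = ndim(image)
    if (split_frames and n == 4) or (split_slices and n == 3):
        # Split along the leading axis, predict each part, keep the order.
        return [predict_with_split(part, model, train_ndim, split_frames, split_slices)
                for part in image]
    if n != train_ndim:
        raise ValueError("dimensions of input data do not match the data used for training")
    return model(image)


if __name__ == "__main__":
    # Hypothetical stand-in for a 2D model: threshold each pixel at 0.5.
    threshold_2d = lambda sl: [[1 if v > 0.5 else 0 for v in row] for row in sl]
    volume = [[[0.9, 0.1], [0.2, 0.8]],
              [[0.0, 0.6], [0.7, 0.3]]]
    print(predict_with_split(volume, threshold_2d, train_ndim=2, split_slices=True))
    # [[[1, 0], [0, 1]], [[0, 1], [1, 0]]]
```

With a model trained on 2D slices, the 3D volume is only accepted when `split_slices=True`; without it, the sketch raises the same kind of dimension-mismatch error described above.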

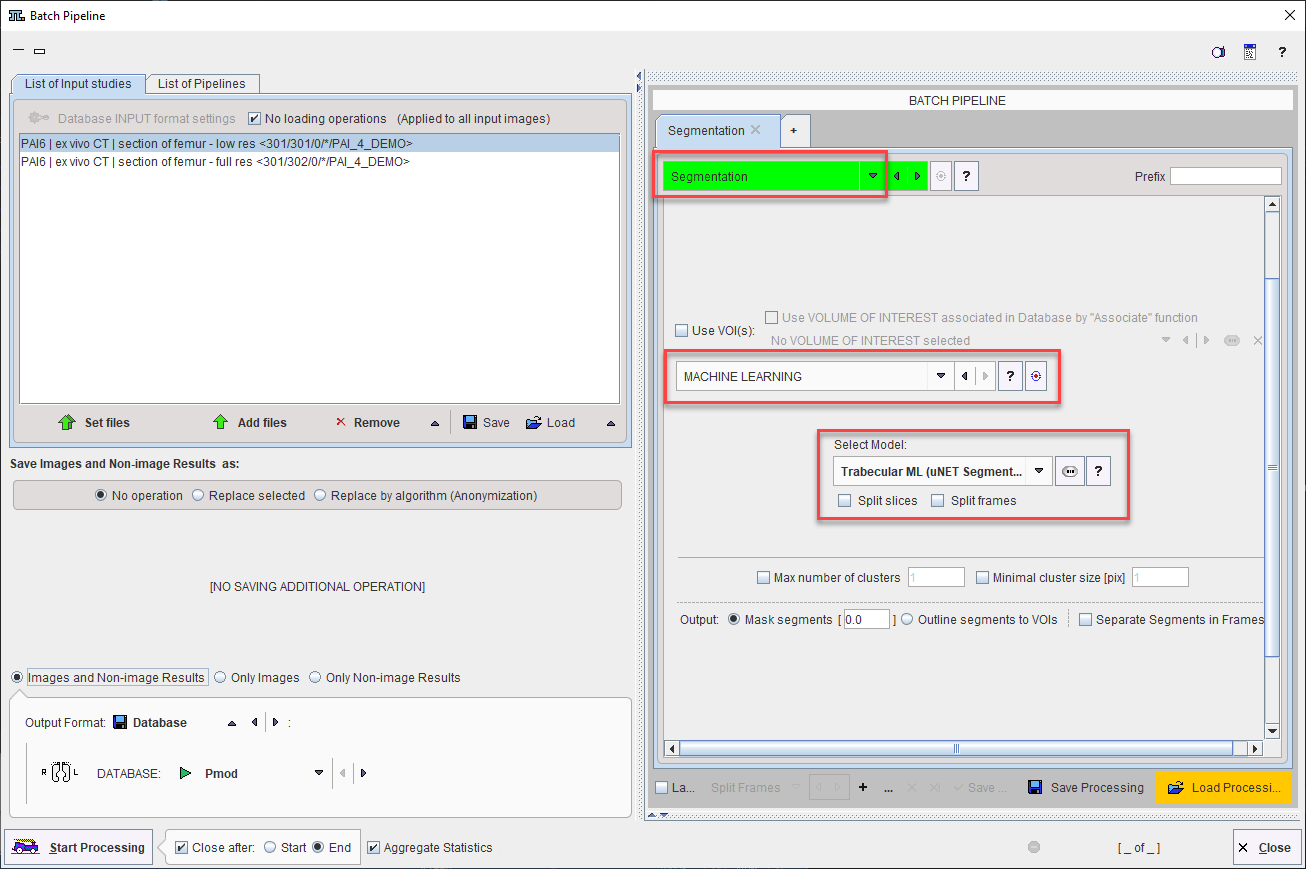

AI-based segmentation in Pipeline Processing

The MACHINE LEARNING segmentation method is also available in the Segmentation image processing tool within Pipeline Processing, which makes AI-based segmentation available for batch processing.

As for interactive segmentation, select the model to be used from the list. Split Slices and Split Frames must be set appropriately for the selected model and input data.

The options Use VOIs, Max number of clusters, Minimal cluster size, and Separate Segments in Frames are not applicable when MACHINE LEARNING is used.

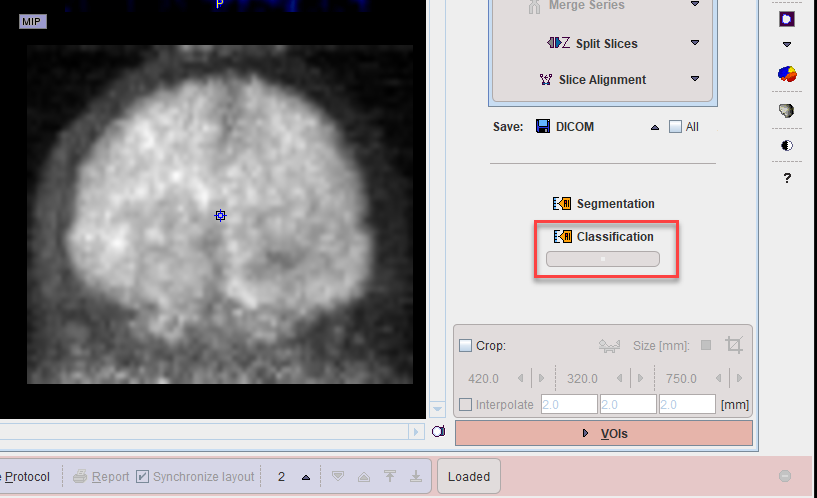

AI-based classification in View tool

A shortcut to AI-based classification is available on the View tab for the data currently loaded.

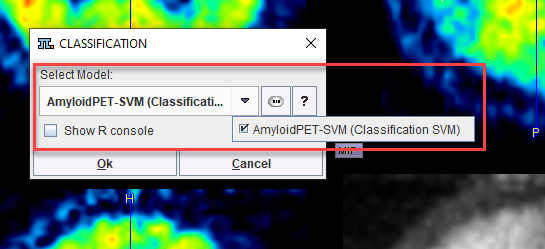

The model to be used must be selected from the menu.

Self-trained models may be selected using the AI Learning Set browser.

Additional information about the model is available using the PAI Model Help button.

Display of the R Console during classification is optional and is toggled using the Show R console checkbox.

The result of classification is shown in tabulated form below the Classification shortcut.

See the Case Study for Human Amyloid PET Classification for further information.

AI-based segmentation in Segment tool

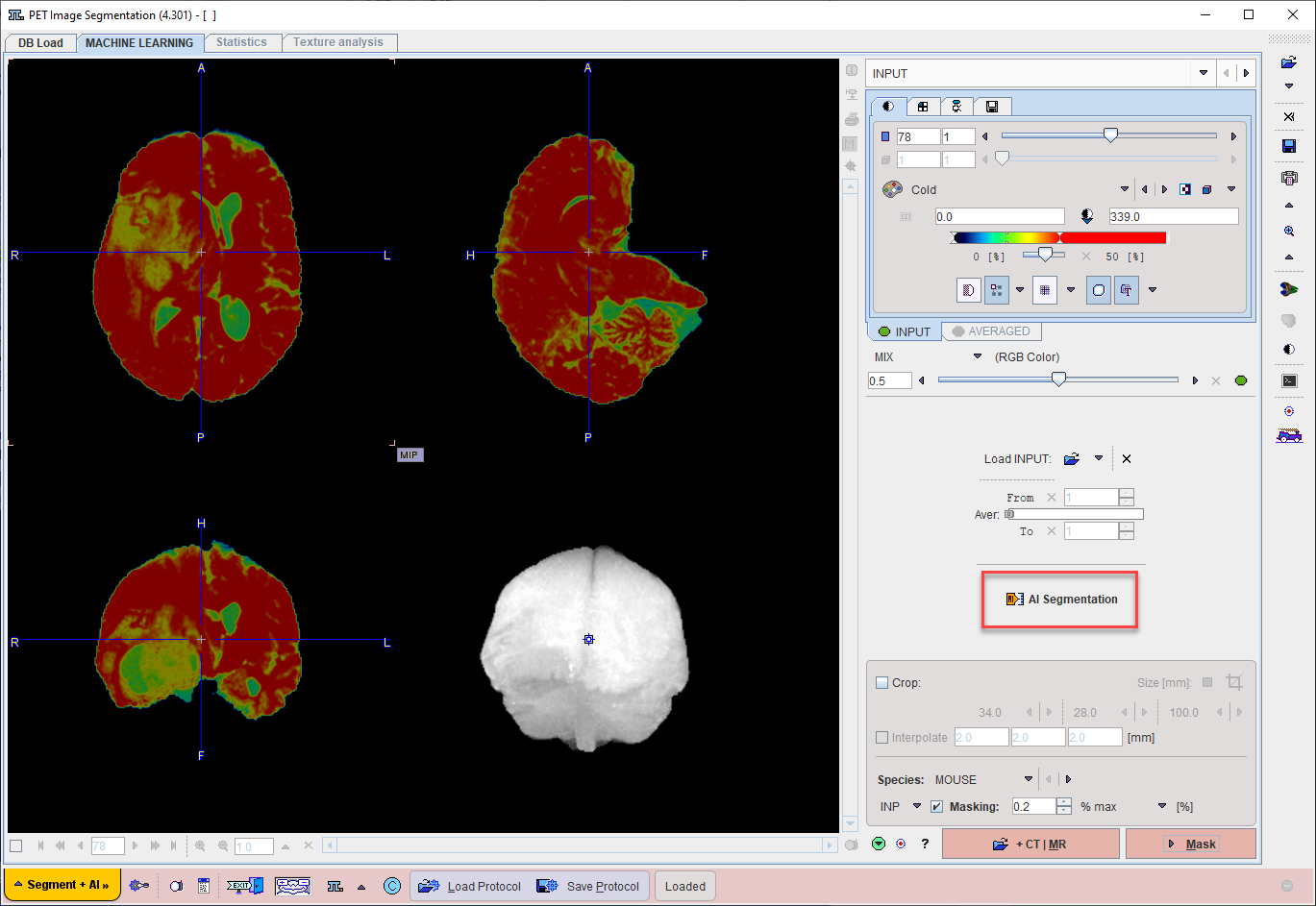

Image Loading

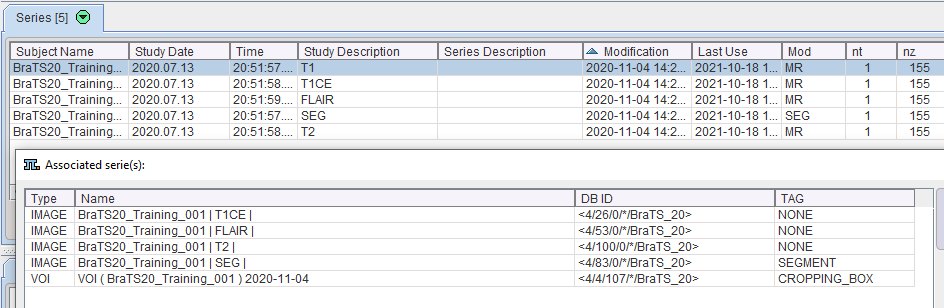

PSEG does not support loading multiple input image series, and this is not necessary for AI-based segmentation. If a segmentation requires multiple input images, load the reference series of the association list (e.g., the T1 MR for the BraTS Tumor Segmentation case study).
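Conceptually, loading the reference series is sufficient because the other inputs can be looked up through its association list. The Python sketch below illustrates such a lookup under stated assumptions: associations are modeled as a dictionary from a reference series ID to a role-to-series mapping, the role names (`T1`, `T1c`, `T2`, `FLAIR`), series IDs, and the function `resolve_inputs` are hypothetical examples rather than PMOD identifiers, and the inputs are returned in the channel order assumed to have been used for training.

```python
from typing import Dict, List

# Hypothetical association list: reference series ID -> {role: series ID}.
Associations = Dict[str, Dict[str, str]]


def resolve_inputs(reference: str, associations: Associations,
                   channel_order: List[str]) -> List[str]:
    """Return all series needed by a multi-input model, in training channel order."""
    linked = associations[reference]  # KeyError if the reference has no associations
    missing = [role for role in channel_order if role not in linked]
    if missing:
        raise KeyError(f"association list of {reference} lacks: {', '.join(missing)}")
    return [linked[role] for role in channel_order]


associations = {
    "sub01_T1": {"T1": "sub01_T1", "T1c": "sub01_T1c",
                 "T2": "sub01_T2", "FLAIR": "sub01_FLAIR"},
}
print(resolve_inputs("sub01_T1", associations, ["T1", "T1c", "T2", "FLAIR"]))
# ['sub01_T1', 'sub01_T1c', 'sub01_T2', 'sub01_FLAIR']
```

Failing early on a missing role mirrors the requirement that every input used in training is also available at prediction time.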

Cropping and interpolation (lower right) are generally not required for the AI-based segmentation workflow, because they are handled by the model according to the preprocessing parameters used in the Learning Set. Cropping may be useful when the image field of view is clearly larger than the scope of the model. For example, an MR image of the human head including the neck may be cropped to the brain only for the IXI Parcellation model (human brain MR deep nuclei segmentation, see Case Study). Masking is not required for AI-based segmentation. We recommend using the AI Segmentation shortcut to skip to the next step of the workflow:
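In index space, cropping a volume to a box amounts to slicing each axis, for example dropping the slices that cover the neck. The sketch below only illustrates that operation and is not how PMOD implements cropping: `crop_3d` is a hypothetical helper, the volume is a nested list indexed as `volume[z][y][x]`, and ranges are half-open `(start, stop)` pairs.

```python
def crop_3d(volume, z_range, y_range, x_range):
    """Return the sub-volume inside half-open (start, stop) index ranges.

    volume is a nested list indexed as volume[z][y][x].
    """
    (z0, z1), (y0, y1), (x0, x1) = z_range, y_range, x_range
    return [[row[x0:x1] for row in sl[y0:y1]] for sl in volume[z0:z1]]


# 3 x 3 x 3 test volume whose voxel value encodes its position: 9*z + 3*y + x.
volume = [[[9 * z + 3 * y + x for x in range(3)] for y in range(3)] for z in range(3)]
print(crop_3d(volume, (1, 3), (0, 2), (1, 2)))  # [[[10], [13]], [[19], [22]]]
```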

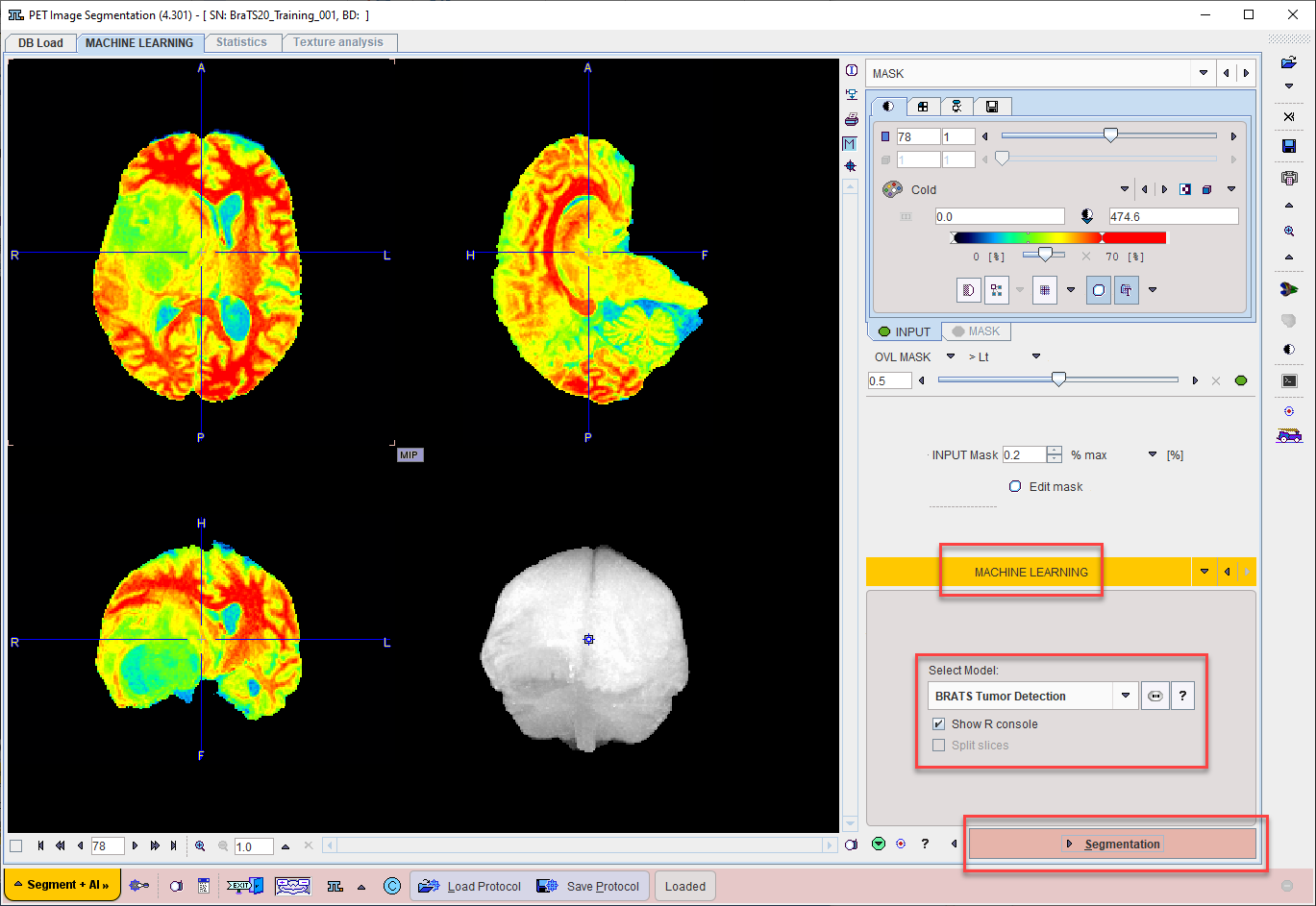

Model Selection

On the MASK page, make sure the segmentation method is set to MACHINE LEARNING.

Choose the appropriate model for the segmentation task using the menu Select Model.

Self-trained models may be selected using the AI Learning Set browser. Note that a description of the model can be retrieved using the PAI Model Help button:

For self-trained models, the help text shown is defined by the user when creating the Learning Set (and in the architecture Python files, if applicable).

Display of the R Console during prediction is optional and is controlled by the Show R Console checkbox.

The dimensions of the input data for prediction must match the dimensions of the data used to train the model. The Split Slices and Split Frames options appear below Select Model when the input data for prediction has more dimensions than the training data. Split Frames reduces 4D data to a series of 3D images that are segmented sequentially. Likewise, Split Slices reduces 3D data to a series of 2D images. After prediction of each split image, the segment is rebuilt and Segments/VOIs are returned according to the dimensions of the input data. If Split Frames/Slices are not selected correctly for the model, an error is returned stating that the dimensions of the input data do not match the data used for training.
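Which of the two options a model needs follows directly from comparing the dimensions of the input with those of the training data. The sketch below uses a hypothetical function, `required_split_options`, and assumes 2D to 4D data as described above. It returns the checkbox states that would let prediction proceed, or raises the mismatch error when no combination of options can help.

```python
def required_split_options(input_ndim: int, train_ndim: int) -> dict:
    """Return the Split Frames / Split Slices settings needed for prediction.

    Assumes 2D, 3D or 4D data. Raises ValueError when no combination of the
    two options can make the input match the training dimensionality.
    """
    if not (2 <= train_ndim <= input_ndim <= 4):
        raise ValueError("dimensions of input data do not match the data used for training")
    return {
        # 4D input must be split into frames unless the model itself is 4D.
        "Split Frames": input_ndim == 4 and train_ndim < 4,
        # 3D (or frame-split 4D) input must be split into slices for a 2D model.
        "Split Slices": input_ndim >= 3 and train_ndim == 2,
    }


for inp, trained in [(4, 3), (4, 2), (3, 2), (3, 3)]:
    print(f"{inp}D input, {trained}D model:", required_split_options(inp, trained))
```

For example, a dynamic (4D) series segmented with a model trained on 2D slices needs both options enabled.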

Segmentation

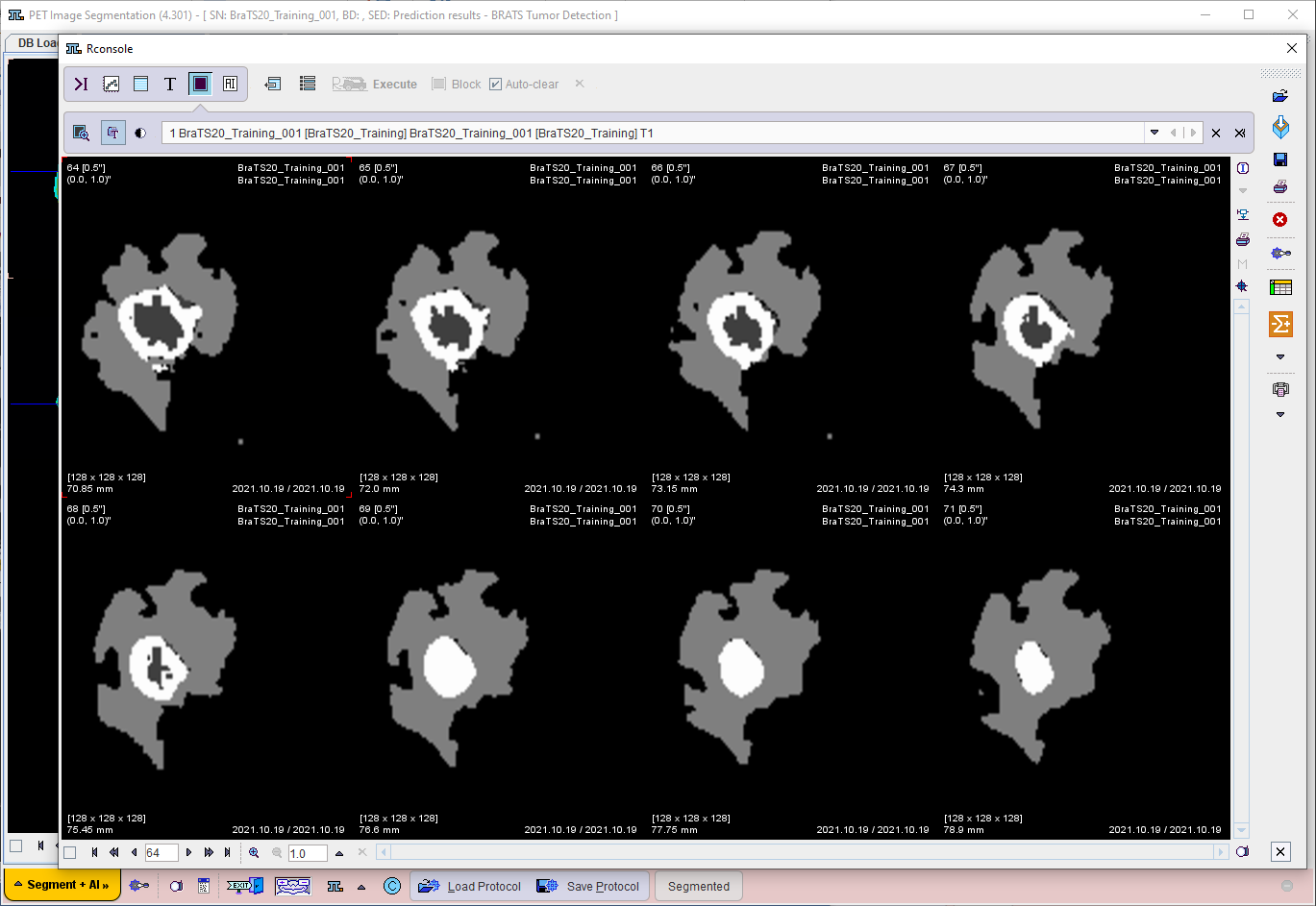

Use the Segmentation workflow button to start prediction. The input data is transferred to the R Console and processed using the selected model. If Show R console was enabled, the resulting label maps are displayed on the image tab of the R Console:

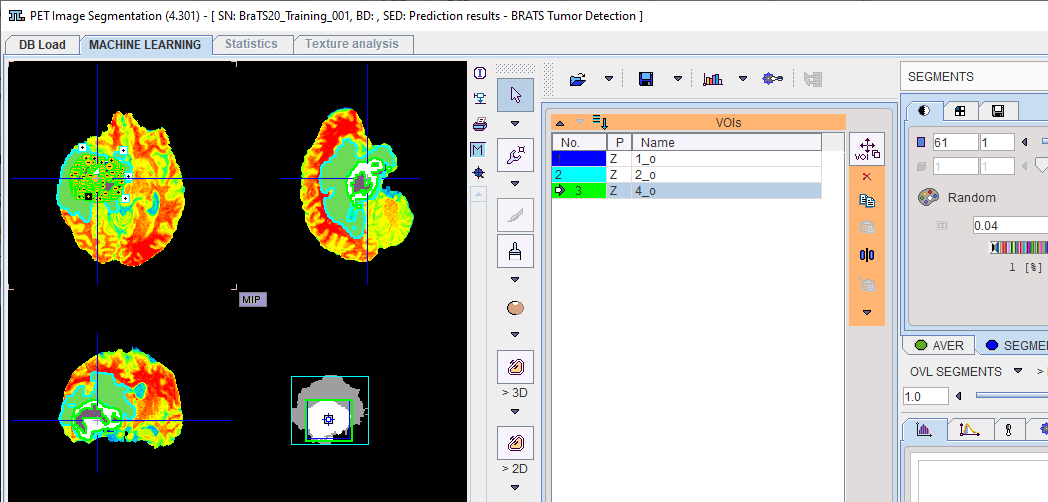

The segmentation result is overlaid on the input series on the SEGMENTS page (once the R Console has been closed).

The segments are automatically converted into numbered VOIs. The interactive VOI labeling function remains available by right-clicking a segment in the image. This opens a dialog window in which a VOI name can be selected from a list or typed in. Selecting a VOI name from a list is also possible via VOI Properties.
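The conversion of a label map into numbered VOIs can be pictured as grouping voxels by their label value. The Python sketch below illustrates this idea only; `label_map_to_vois` is a hypothetical helper, the label map is a nested list indexed as `[z][y][x]`, and label 0 is assumed to be background.

```python
from collections import defaultdict


def label_map_to_vois(label_map):
    """Group voxel coordinates (z, y, x) by label value, one entry per VOI.

    Label 0 is assumed to be background and is skipped.
    """
    vois = defaultdict(list)
    for z, sl in enumerate(label_map):
        for y, row in enumerate(sl):
            for x, label in enumerate(row):
                if label != 0:
                    vois[label].append((z, y, x))
    return dict(sorted(vois.items()))


label_map = [[[0, 1], [2, 2]],
             [[1, 0], [0, 2]]]
for number, voxels in label_map_to_vois(label_map).items():
    print(f"VOI {number}: {len(voxels)} voxels")
# VOI 1: 2 voxels
# VOI 2: 3 voxels
```

Each numbered entry corresponds to one VOI; assigning a meaningful name afterwards is what the interactive labeling dialog provides.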

The segment label map itself can be saved using the save button in the taskbar on the right.

Please refer to the PSEG User Guide for details of the general segmentation functionality.

At the end of processing, the protocol can be saved using Save Protocol. With Load Protocol, the workflow can be retrieved and re-executed. Additionally, the input series can be changed and the protocol used to analyze the next sample. Protocols also provide access to batch processing.

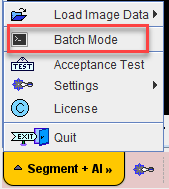

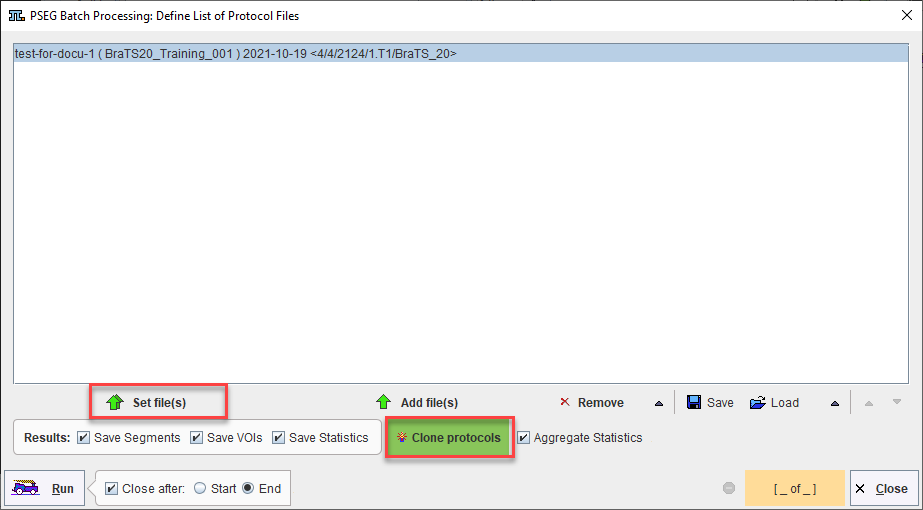

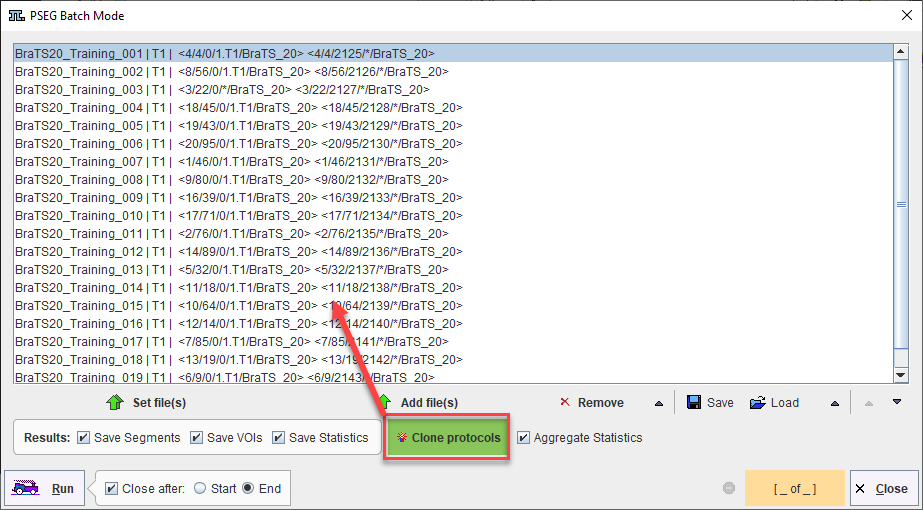

Batch Processing

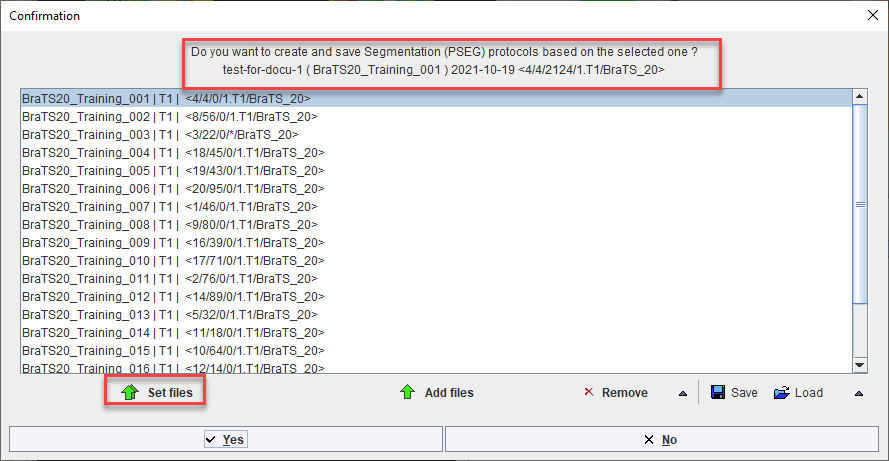

A saved protocol is required to start batch processing. This saved protocol provides the settings that will be used for the workflow across all samples. A set of saved protocols can be loaded using Set Files, or more commonly a single protocol describing the desired workflow can be cloned for all input samples using Clone Protocols.

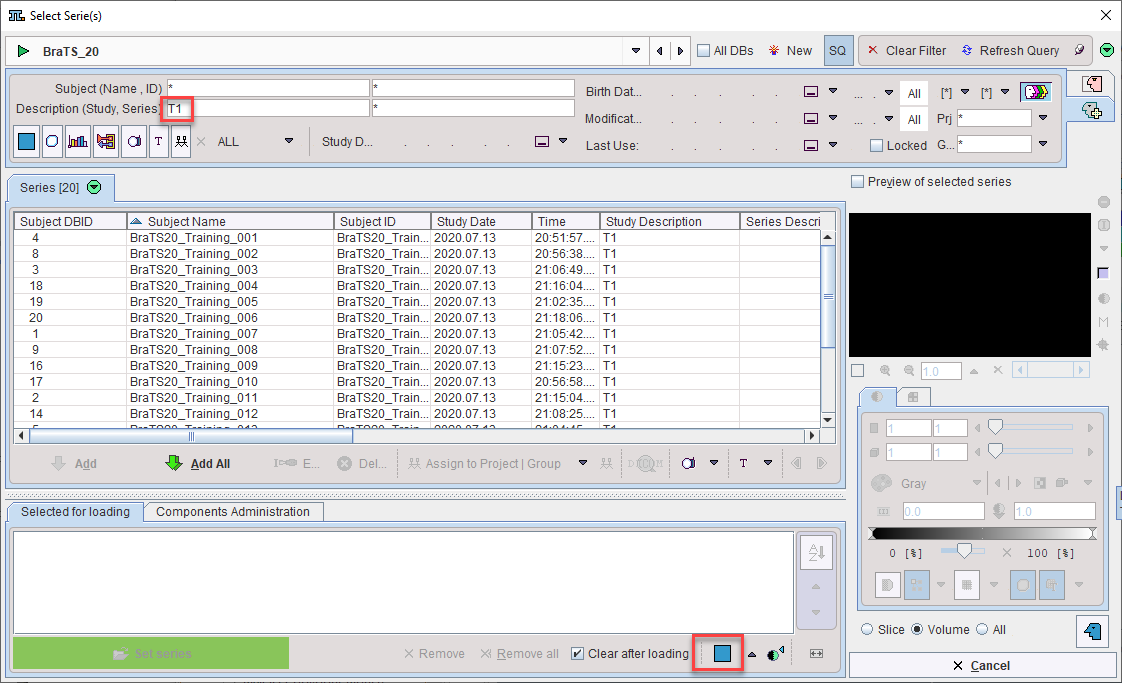

Clone Protocols opens a dialog window in which a list of input samples should be provided using Set Files. In the case of AI segmentation tasks that require multiple input series, the reference for the association list should be selected. PMOD will automatically retrieve the other associated series for processing. The flat view for the database and advanced database queries can be useful to select all input series for batch processing efficiently.
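Cloning can be pictured as copying one template protocol and exchanging only its input series. In the Python sketch below, a protocol is modeled as a plain dictionary; the keys (`method`, `model`, `options`, `input`), the model name, and the function `clone_protocols` are hypothetical and do not describe PMOD's protocol file format. Associated series are not listed because they are retrieved from the reference series.

```python
import copy
from typing import List


def clone_protocols(template: dict, reference_series: List[str]) -> List[dict]:
    """Create one protocol per sample from a single saved template protocol."""
    clones = []
    for ref in reference_series:
        protocol = copy.deepcopy(template)  # shared settings are copied, not aliased
        protocol["input"] = ref             # only the reference series differs
        clones.append(protocol)
    return clones


template = {
    "method": "MACHINE LEARNING",
    "model": "example_model",              # hypothetical model name
    "options": {"split_slices": True, "split_frames": False},
    "input": "sub01_T1",
}
for p in clone_protocols(template, ["sub01_T1", "sub02_T1", "sub03_T1"]):
    print(p["input"], p["model"])
```

The deep copy keeps each cloned protocol independent, so editing the settings of one sample does not silently change the others or the template.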

Once the desired list of protocols has been loaded or generated by cloning, batch processing can be launched using the Run button. Results are saved in the same location as the input data; we strongly recommend using the database.